All three model sizes (7B, 13B, and 70B) are available for download on Hugging Face. Ollama offers another route: it lets you run, create, and share large language models locally. You can also just grab a quantized model or a fine-tune of Llama 2; TheBloke has several of those, as usual. That said, you could try to modify the… Another option is an OpenAI-compatible local server. Underpinning all these features is the robust llama.cpp, which is why you have to download the model in the GGUF file format. To install and run inference on…

Download the Llama 2 model. There are quite a few things to consider when deciding which iteration of Llama 2 you need. Mac users or Windows CPU users…
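"OpenAI-compatible" means the local server accepts requests in the same shape as OpenAI's chat-completions endpoint, so existing client code can simply point at a local address. The sketch below builds such a request with only the standard library and does not send it. The base URL, port, and model name are hypothetical placeholders; check the documentation of whichever server you run (llama.cpp, Ollama, or another) for its actual address and model naming.

```python
import json
import urllib.request

# Assumption: a local OpenAI-compatible server listening at this hypothetical address.
BASE_URL = "http://localhost:8080/v1"


def build_chat_request(prompt: str, model: str = "llama-2-7b-chat") -> urllib.request.Request:
    """Build (but do not send) a POST request in the OpenAI chat-completions shape."""
    body = {
        "model": model,  # hypothetical name; local servers may ignore or remap it
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.7,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_chat_request("Why is the sky blue?")
print(req.full_url)      # http://localhost:8080/v1/chat/completions
print(req.get_method())  # POST

# Once a server is actually running, sending it would look like:
# with urllib.request.urlopen(req) as resp:
#     reply = json.load(resp)
#     print(reply["choices"][0]["message"]["content"])
```

Because only the base URL differs from a hosted API, switching between a local GGUF model and a remote service is mostly a configuration change.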

The Llama-2-GGML-CSV-Chatbot is a conversational tool powered by a fine-tuned large language model (LLM), Llama 2 7B. This chatbot uses CSV retrieval, enabling users to… Another project is an experimental Streamlit chatbot app built for LLaMA 2 or any other LLM; it keeps session chat history and provides an option to select among multiple LLaMA 2 API endpoints on Replicate. A third chatbot is built on Meta's open-source Llama 2 model, specifically the Llama 2 7B model deployed by the Andreessen Horowitz (a16z) team and hosted on… Customize Llama's personality by clicking the… Finally, there is a self-hosted, offline, ChatGPT-like chatbot powered by Llama 2: 100% private, with no data leaving…
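The two ideas these chatbots combine, retrieving relevant CSV rows and replaying session chat history into the prompt, can be illustrated with a toy sketch. This is not code from any of the projects named above: the CSV data and the helper names (`load_rows`, `retrieve`, `build_prompt`) are hypothetical, and the retrieval step uses simple keyword overlap, whereas a real pipeline would more likely use embeddings. The resulting prompt string would then be passed to the Llama 2 model.

```python
import csv
import io

# Hypothetical CSV data; a real app would load the user's uploaded file.
CSV_TEXT = """product,price,stock
apple,0.50,120
banana,0.25,0
cherry,3.00,40
"""


def load_rows(text: str) -> list[dict[str, str]]:
    """Parse CSV text into one dict per row, keyed by the header names."""
    return list(csv.DictReader(io.StringIO(text)))


def retrieve(rows: list[dict[str, str]], question: str, k: int = 1) -> list[dict[str, str]]:
    """Return the k rows whose cell values appear most often as words in the question."""
    words = set(question.lower().replace("?", "").split())

    def score(row: dict[str, str]) -> int:
        return sum(1 for value in row.values() if value.lower() in words)

    return sorted(rows, key=score, reverse=True)[:k]


def build_prompt(history: list[tuple[str, str]], context_rows: list[dict[str, str]], question: str) -> str:
    """Assemble retrieved CSV context, prior turns (oldest first), and the new question."""
    lines = ["Answer using only this CSV context:"]
    lines += [", ".join(f"{key}={val}" for key, val in row.items()) for row in context_rows]
    for user_msg, bot_msg in history:  # session chat history
        lines.append(f"User: {user_msg}")
        lines.append(f"Assistant: {bot_msg}")
    lines.append(f"User: {question}")
    lines.append("Assistant:")
    return "\n".join(lines)


rows = load_rows(CSV_TEXT)
history = [("What do you sell?", "Apples, bananas, and cherries.")]
question = "Is banana in stock?"
prompt = build_prompt(history, retrieve(rows, question), question)
print(prompt)
```

Keeping history as a list of (user, assistant) pairs mirrors how a session-state chat UI typically stores turns, and it makes trimming old turns to fit the context window a one-line slice.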

Fine-tune Llama 2 with DPO: a guide to using the TRL library's DPO method to fine-tune Llama 2 on a specific dataset. This blog post introduces the Direct Preference Optimization (DPO) method, which is now available in the TRL library, and shows how one can fine… The tutorial provided a comprehensive guide on fine-tuning the LLaMA 2 model using techniques like QLoRA, PEFT, and SFT to overcome memory and… In this section we look at the tools available in the Hugging Face ecosystem to efficiently train Llama 2 on simple hardware, and show how to fine… This tutorial will use QLoRA, a fine-tuning method that combines quantization and LoRA. For more information about what those are and how they work…
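At its core, DPO trains the model directly on preference pairs (a chosen and a rejected response to the same prompt) by comparing how much the policy model and a frozen reference model each favor the chosen answer. The sketch below computes the standard sigmoid form of the DPO loss from summed sequence log-probabilities. It is a standalone illustration, not TRL's implementation; the function names are hypothetical and `beta=0.1` is an assumed example value.

```python
import math


def neg_log_sigmoid(z: float) -> float:
    """Numerically stable -log(sigmoid(z)) == log(1 + exp(-z))."""
    if z >= 0:
        return math.log1p(math.exp(-z))
    return -z + math.log1p(math.exp(z))


def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """Per-example DPO loss from sequence log-probs under the policy and reference models."""
    chosen_margin = policy_chosen_logp - ref_chosen_logp        # how much the policy upweights the chosen answer
    rejected_margin = policy_rejected_logp - ref_rejected_logp  # ... and the rejected answer
    return neg_log_sigmoid(beta * (chosen_margin - rejected_margin))


# Policy identical to the reference: loss is log(2), about 0.693.
print(dpo_loss(-10.0, -10.0, -10.0, -10.0))
# Policy shifted toward the chosen answer: loss drops below log(2).
print(dpo_loss(-5.0, -15.0, -10.0, -10.0))
```

Because the loss depends only on log-probability differences, no separate reward model is needed; in practice the policy log-probs come from the model being fine-tuned (for example with LoRA adapters) and the reference log-probs from a frozen copy.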

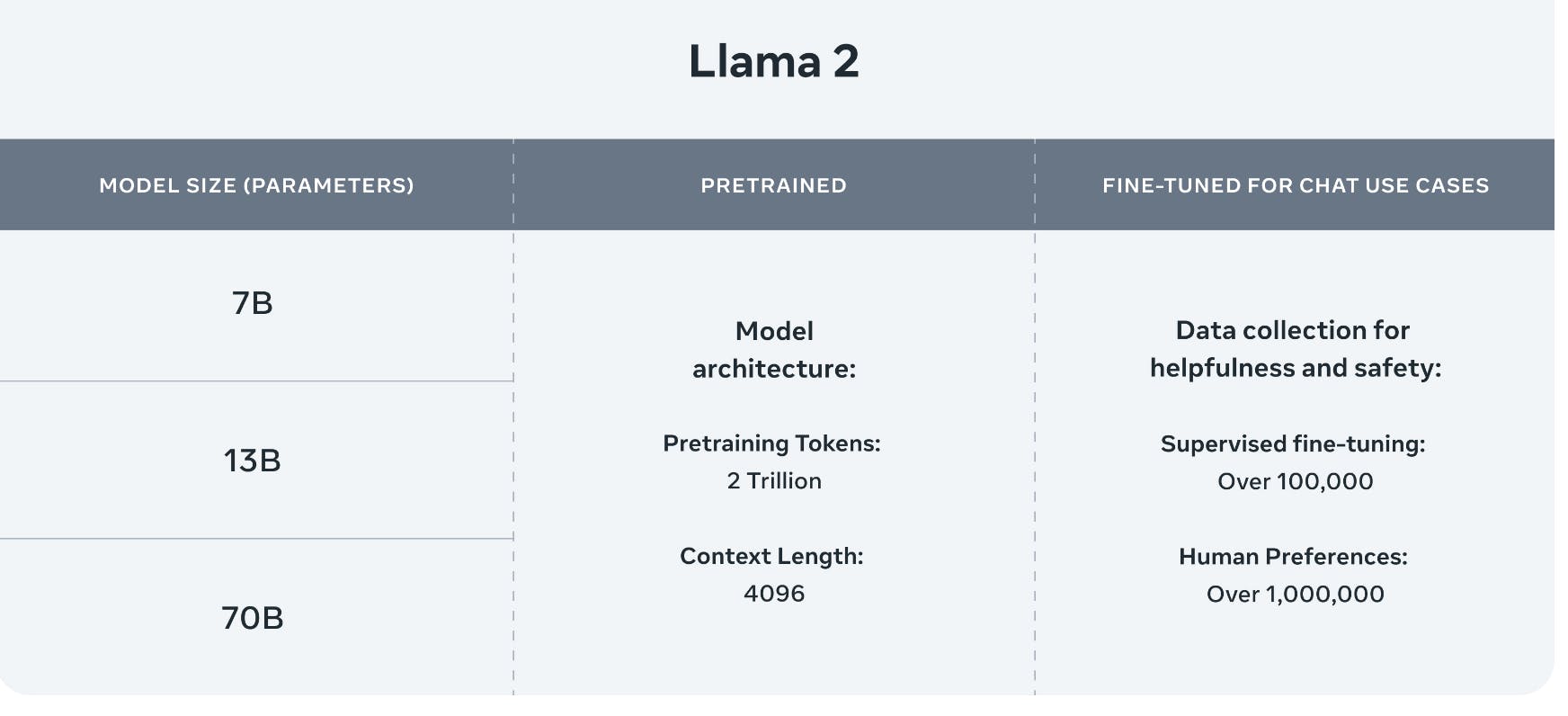

All three currently available Llama 2 model sizes (7B, 13B, and 70B) are trained on 2 trillion tokens and have double the context length of Llama 1. Llama 2 encompasses a series of… Llama 2 is a collection of pretrained and fine-tuned generative text models ranging in scale from 7 billion to 70 billion parameters; this is the repository for the 70B pretrained model. Some differences between the two models: Llama 1 was released in 7, 13, 33, and 65 billion parameter sizes, while Llama 2 has 7, 13, and 70 billion parameters, and Llama 2 was trained on 40% more… The abstract from the paper is the following: "In this work, we develop and release Llama 2, a collection of pretrained and fine-tuned large language models (LLMs) ranging in scale from 7…" Llama 2 70B is substantially smaller than Falcon 180B. Can it entirely fit into a single consumer GPU? A high-end consumer GPU such as the NVIDIA…
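Whether a model fits on one GPU starts with back-of-the-envelope arithmetic: weight memory is roughly the parameter count times the bits per parameter, divided by 8 to get bytes. The sketch below applies this to the three Llama 2 sizes at 16-, 8-, and 4-bit precision. It assumes a 24 GB card as an example of a high-end consumer GPU, uses nominal parameter counts, and counts weights only (no KV cache or activations). Real quantization formats also store per-block scale data, so actual files are somewhat larger than these figures.

```python
def weight_memory_gb(n_params: float, bits_per_param: float) -> float:
    """Approximate memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9


# Assumption: a 24 GB high-end consumer GPU; KV cache and activations are ignored.
GPU_MEMORY_GB = 24

for name, n_params in [("7B", 7e9), ("13B", 13e9), ("70B", 70e9)]:
    for bits in (16, 8, 4):
        need = weight_memory_gb(n_params, bits)
        verdict = "fits" if need <= GPU_MEMORY_GB else "does not fit"
        print(f"Llama 2 {name} @ {bits}-bit: ~{need:.1f} GB of weights, {verdict} in {GPU_MEMORY_GB} GB")
```

By this estimate, the 70B model needs about 140 GB at 16-bit and still about 35 GB at 4-bit, so even aggressive quantization leaves it larger than a single 24 GB card, while the 7B model fits even at 16-bit.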
